Databricks has added a Lakebase Postgres database layer to its lakehouse, enabling AI apps and agents to run analytics on operational data within the Databricks environment. It also introduced Agent Bricks, a tool for automated AI agent development.

The data warehousing and analytics company held its annual Data + AI Summit and investor session in San Francisco, where it made these and numerous other announcements.

Ali Ghodsi, co-founder and CEO of Databricks, stated: “We’ve spent the past few years helping enterprises build AI apps and agents that can reason on their proprietary data with the Databricks Data Intelligence Platform. Now, with Lakebase, we’re creating a new category in the database market: a modern Postgres database, deeply integrated with the lakehouse and today’s development stacks. As AI agents reshape how businesses operate, Fortune 500 companies are ready to replace outdated systems. With Lakebase, we’re giving them a database built for the demands of the AI era.”

Lakebase, built on Neon technology that separates compute and storage, is a fully managed, Postgres-compatible database that supports seamless data loading and transformation from over 300 sources. According to Databricks, “operational databases (OLTP) are a $100-billion-plus market that underpin every application. However, they are based on decades-old architecture designed for slowly changing apps, making them difficult to manage, expensive and prone to vendor lock-in.” But, it adds, “now, every data application, agent, recommendation and automated workflow needs fast, reliable data at the speed and scale of AI agents.”

Databricks argues that operational and analytical systems have to converge, both to reduce latency between AI systems and to give enterprises current information for real-time decisions. Lakebase stores operational data in low-cost data lakes, “with continuous autoscaling of compute to support agent workloads.”

William Blair analyst Jason Ader told subscribers that Databricks management presented its evolving product roadmap at the San Francisco event as a three-chapter framework:

- Chapter 1 is focused on establishing the lakehouse as a foundational architecture.

- Chapter 2 embeds AI into the platform to broaden access to data insights.

- Chapter 3, the highlight of this year’s summit, introduces a new architectural category called a Lakebase, a transactional layer designed to support operational use cases natively within the lakehouse.

Ader said this positions Databricks to unify the entire data stack across analytical, AI, and transactional workloads. Databricks intends to offer seamless capabilities across three components of future enterprise applications: databases, analytics, and AI. In Ader’s view, at a time when enterprises are prioritizing architectural simplicity, governance, and AI enablement, Databricks’ expanding platform strategy appears well aligned with customer demand, though time will tell whether customers want this level of tight integration.

Databricks’ Agent Bricks feature automates the creation of tailored AI agents and democratizes agentic AI development by enabling business users to create their own agents. Databricks says Agent Bricks automatically generates tailored synthetic data, so businesses can bypass the traditional trial-and-error cycle without piecing together multiple tools or retraining staff.

Databricks Managed Iceberg Tables entered Public Preview at the Data + AI Summit. They offer full support for the Apache Iceberg REST Catalog API, which allows external engines and tools, such as Apache Spark, Flink, and Kafka, to interoperate with tables governed by Unity Catalog, Databricks’ central metadata management facility. Managed Iceberg Tables include automatic performance optimizations which, Databricks claims, deliver cost-efficient storage and lightning-fast queries out of the box.

Informatica, which is being acquired by Salesforce, is a launch partner for both Databricks’ Managed Iceberg Tables and Lakebase. Informatica says Managed Iceberg Tables allow its customers to ingest, cleanse, govern, and transform Iceberg-format data at enterprise scale within the Databricks Data Intelligence Platform.

Informatica is also introducing new capabilities aimed at accelerating the adoption of AI agents and GenAI on Mosaic AI, Databricks’ suite of AI offerings that help enterprises build and deploy AI agent systems. These include:

- Mosaic AI connectors for Cloud Application Integration (CAI): Rapidly deployable AI agents that integrate enterprise data with Mosaic AI through a no-code interface.

- GenAI Recipes for CAI: Pre-configured templates that simplify and speed up GenAI application development and deployment.

PuppyGraph, a supplier of a real-time, zero-ETL graph query engine, also announced native integration with Managed Iceberg Tables. This allows customers to run complex graph queries directly on Iceberg tables governed by Unity Catalog, with no data movement and no ETL pipelines.

By combining PuppyGraph’s in-place graph engine with Managed Iceberg Tables, customers can:

- Query massive Iceberg datasets as a live graph in real time.

- Use graph traversal to detect fraud, lateral movement, and network paths.

- Perform root cause analysis on telemetry data using service relationship graphs.

- Eliminate the need for ETL into siloed graph databases.

- Scale analytics across petabytes with minimal operational overhead.

Databricks announced two major partnerships. The first is a multi-year extension of its existing strategic Azure Databricks partnership with Microsoft, bringing tighter native integration across Azure AI Foundry, Power Platform and the upcoming SAP Databricks, wrapped in a shared ambition to power “AI-native” enterprises. The second is with Google Cloud: Gemini 2.5 models (Pro and Flash) will now be available natively inside Databricks. Customers can run Gemini models directly on their enterprise data (via SQL or endpoints), with built-in governance, no data movement, and billing through their Databricks contract. The deal also includes access to Deep Think mode.

Among its other announcements, Databricks unveiled a $100m investment in AI education to address the talent gap and train the professionals of the future. Students, educators and aspiring data professionals will receive access to the Databricks Free Edition along with a range of training resources.

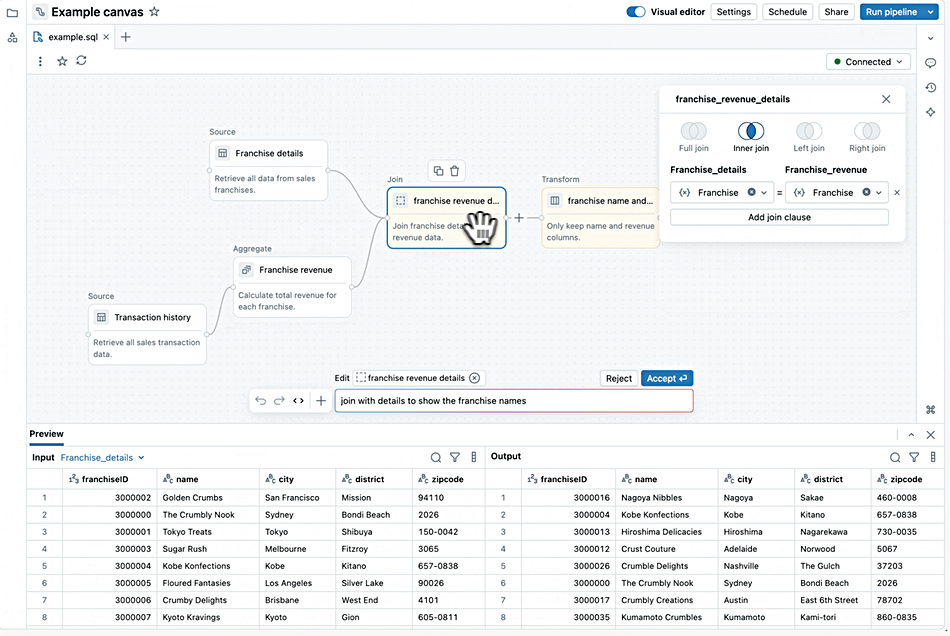

Databricks announced an upcoming preview of Lakeflow Designer, a new no-code ETL capability that lets non-technical users author production data pipelines using a visual drag-and-drop interface and a natural-language GenAI assistant. Ghodsi said: “Lakeflow Designer makes it possible for more people in an organization to create production pipelines so teams can move from idea to impact faster.”

The company also introduced Databricks One, which lets business users interact with AI/BI dashboards, ask questions of their data in natural language through AI/BI Genie (powered by deep research), find relevant dashboards, and use custom-built Databricks apps, all in what Databricks describes as an elegant, code-free environment built for their needs. The company said this marks a major evolution in extending data intelligence beyond technical users to business teams across the enterprise.

Databricks announced that Synapxe, Singapore’s national health tech agency, is using Databricks to power its Health Empowerment through Advanced Learning & Intelligent eXchange (HEALIX), a comprehensive cloud-based analytics platform for the entire public healthcare sector. The two organisations have also signed a Memorandum of Understanding (MoU), Databricks’ first with a public healthcare organisation in Singapore, aimed at unlocking healthcare innovation and training data and AI talent within the country’s healthcare industry.

Databricks told financial analysts it expects to reach $3.7 billion in annualized revenue by July, representing 50 percent year-on-year growth. It reported $2.6 billion in revenue for its fiscal 2025, which ended in January 2025, and was close to being cash flow-positive. The company plans to hire 3,000 people in total during 2025; its current headcount is more than 8,000. No IPO date has been officially set.

Lakebase is available in Public Preview, with significant further improvements planned over the coming months. To learn more, read Databricks’ “What is a Lakebase?” blog post. Lakeflow Designer will enter Private Preview shortly. Databricks One will be available in public beta later this summer, with a “consumer access” entitlement available today.