DDN says it has secured the top position against its competitors on the IO500 benchmark.

The IO500 rates HPC (High-Performance Computing) storage systems with a single score reflecting their read/write bandwidth, file metadata operations (file creation, deletion, and lookup), and the speed of searching for files in a directory tree. There is a 10-node list, which limits runs to 10 client nodes and suits smaller HPC systems, and a production list with no limit on node count. DDN says that most enterprise AI workloads run at 10-node scale and that it is number one in that category, at least on this benchmark, claiming it outperforms competitors “by as much as 11x.”
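To show how one number can summarize such different measurements, here is a minimal sketch of the scoring rule as we understand it from the IO500's published rules: the bandwidth phases (in GiB/s) are combined by geometric mean into a bandwidth score, the metadata and find phases (in kIOPS) into a metadata score, and the final score is the geometric mean of those two. The function names and the phase values are hypothetical, chosen only for illustration, and a real run has more metadata phases than shown here.

```python
import math


def geometric_mean(values):
    """Return the geometric mean of a non-empty list of positive numbers."""
    if not values or any(v <= 0 for v in values):
        raise ValueError("geometric mean needs a non-empty list of positive values")
    return math.prod(values) ** (1.0 / len(values))


def io500_score(bandwidth_gib_s, metadata_kiops):
    """Combine per-phase results into an IO500-style score (a sketch, not the official tool).

    bandwidth_gib_s: results of the IOR bandwidth phases, in GiB/s
    metadata_kiops:  results of the mdtest and find phases, in kIOPS
    Returns (bandwidth score, metadata score, overall score).
    """
    bw = geometric_mean(bandwidth_gib_s)
    md = geometric_mean(metadata_kiops)
    return bw, md, math.sqrt(bw * md)


if __name__ == "__main__":
    # Hypothetical phase results, invented purely for illustration.
    bw, md, score = io500_score([16, 1, 16, 1], [25, 400, 25, 400])
    print(f"bandwidth {bw:.2f}, metadata {md:.2f}, score {score:.2f}")
```

Run as a script, it prints `bandwidth 4.00, metadata 100.00, score 20.00`. Because both levels use a geometric mean, the score responds to relative rather than absolute changes: doubling any one of the four bandwidth phases multiplies the bandwidth score by 2^(1/4), whether that phase runs at 1 GiB/s or 16 GiB/s.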

DDN co-founder and CEO Alex Bouzari stated: “AI is transforming every industry, and the organizations leading that transformation are the ones that understand infrastructure performance is not optional – it’s mission-critical. Being ranked #1 on the IO500 benchmark is more than a technical achievement – it’s proof that our customers can count on DDN to deliver the speed, scale, and reliability needed to turn data into competitive advantage. DDN is not just ahead. We have left the competition behind.”

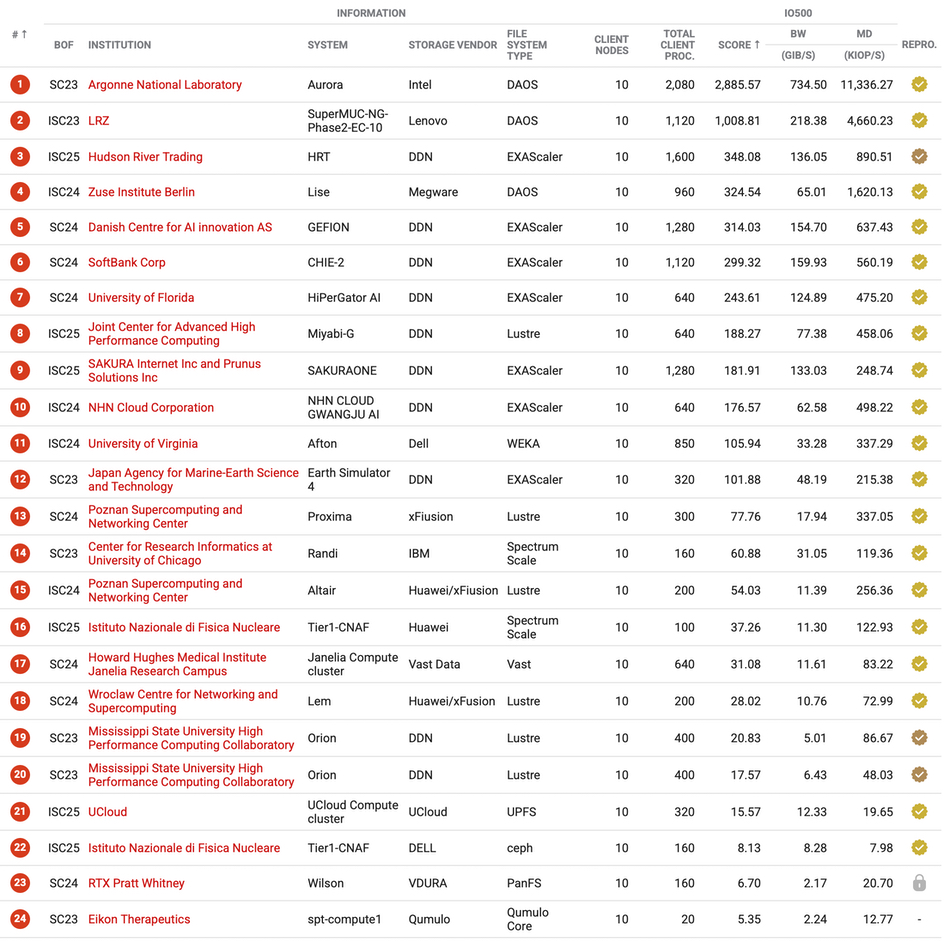

The IO500 results are published twice yearly at the major HPC conferences (SC and ISC), and the latest 10-node results can be seen here. Entries are listed by institution, and the top 24 are shown in a table:

DAOS storage systems at Argonne and LRZ take the top two slots, with scores of 2,885.57 and 1,008.81 respectively, while Hudson River Trading takes the number three slot with its DDN EXAScaler system scoring 348.08.

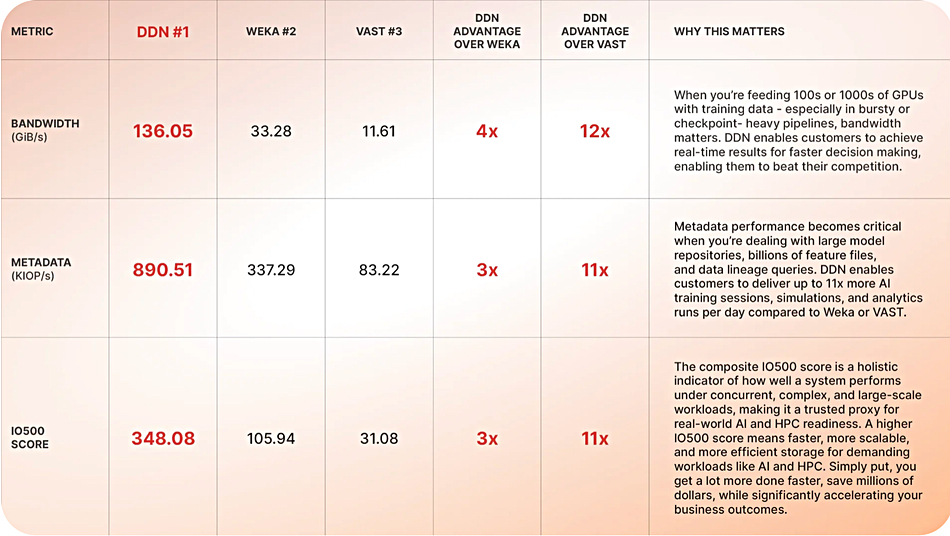

WEKA appears at number 11 with a University of Virginia system scoring 105.94, while VAST enters at the number 17 slot with a 31.08 score from a Howard Hughes Medical Institute (Janelia Research Campus) system. DDN has separated out its own results, as well as those of rivals WEKA and VAST, in its own table:

As DDN’s release says it has “secured the #1 position against its competitors” on this list, it apparently does not rate DAOS systems as being competition for its EXAScaler and Lustre products.

DDN says the IO500 is “the gold standard for assessing real-world storage performance in AI and HPC environments.”

We should note that the IO500 is a file-oriented benchmark, not an AI benchmark. If there were an AI-focused storage benchmark, there is no guarantee that it would reproduce the IO500 rankings one for one.

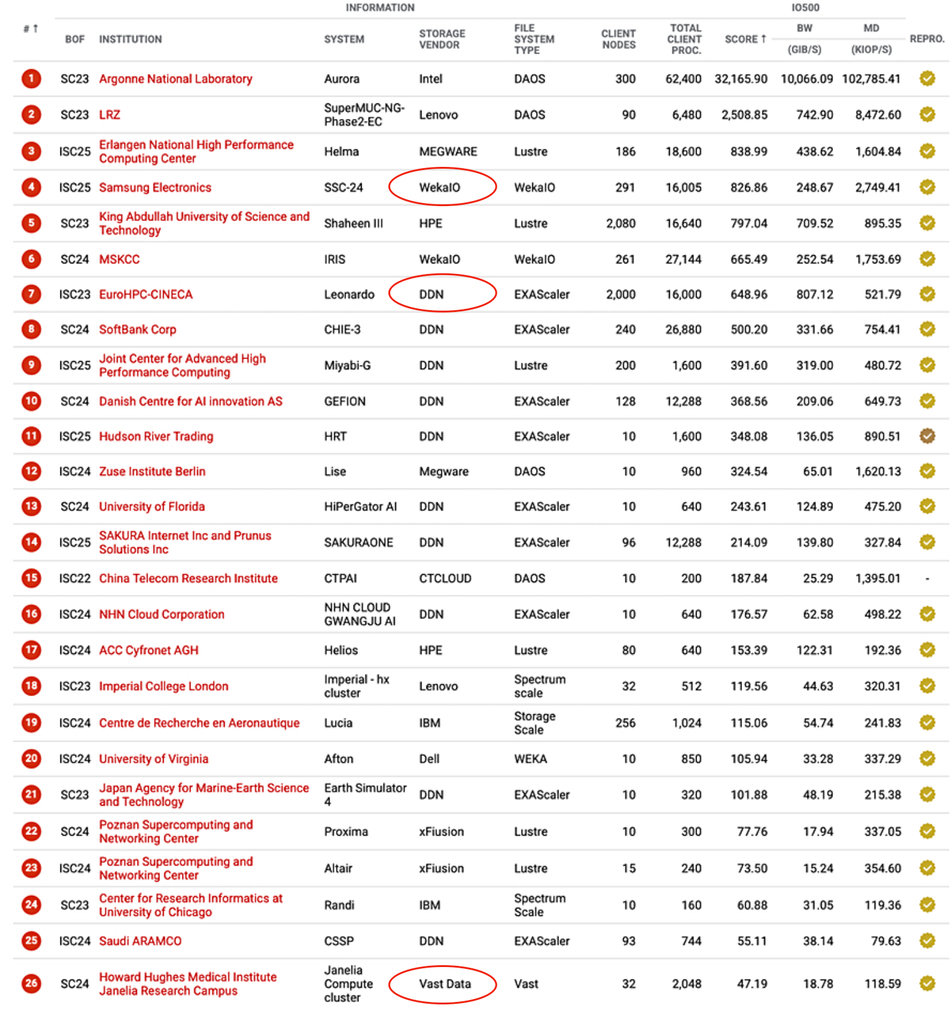

Full IO500 Production List

The full production IO500 list, with no 10-node restriction, is rather different from the 10-node results, as you can see:

DAOS still holds the top two slots. WEKA appears at number four, scoring 826.86, with DDN behind it at number seven with 648.96. VAST is at number 26 with 47.19.

Competitors

We asked WEKA, VAST, and Hammerspace for comment on the IO500 results and DDN’s claims.

WEKA told us: “DDN’s recent IO500 claims deserve a closer look. They rank behind DAOS in the 10 Node Production list, and WEKA outperforms them in the broader Production category with a top score of 826.86, compared to DDN’s best-ever result of 648.96. These results, recently published by Samsung Electronics using WEKA, are publicly available on the official IO500 list and are clear, irrefutable, and independently validated results.

“The numbers speak for themselves:

- Higher Overall Score: WEKA delivered an IO500 score of 826.86, achieved with only ~1.5 PiB of usable capacity and 291 client nodes—a fraction of the 42 PiB and ~2,000 nodes DDN used for a significantly lower score of 648.96.

- 5× Metadata Advantage: WEKA delivered ~2.75 million metadata IOPS, compared to DDN’s ~520,000, a 5× performance advantage—critical for metadata-heavy workloads such as AI training and genomics.

- Over 2× Total IOPS: Combining bandwidth and metadata, WEKA achieved ~3 million total IOPS, more than 2× the total I/O capacity of DDN’s configuration (~1.45 million IOPS).

- More Efficiency with Less Hardware: WEKA achieved higher performance with dramatically fewer resources—underscoring the architectural efficiency of WEKA’s architecture.

“In contrast, we believe DDN’s most recent production configuration is not reflective of real-world environments: a single metadata server with 32 MDTs, no storage servers, and 0 PiB of usable capacity. The result is even flagged by IO500 as having ‘Limited Reproducibility.’

“WEKA purpose-built its proprietary architecture to eliminate the need for special tuning or artificial configurations. Our performance scales automatically, consistently and predictably, and our results are reproducible, balanced, and representative of what our real-world customers run in production.”
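WEKA’s headline multiples can be checked against the figures in its own statement. The short sketch below simply divides WEKA’s quoted numbers by DDN’s; the inputs are WEKA’s approximate (“~”) figures taken as stated and not independently verified, and “total IOPS” is, as far as we know, WEKA’s own aggregate rather than an official IO500 metric. The names `WEKA`, `DDN`, and `claim_ratios` are hypothetical.

```python
# Figures exactly as quoted in WEKA's statement; not independently verified here.
# "total_iops" is WEKA's own aggregate of bandwidth and metadata results.
WEKA = {"score": 826.86, "md_iops": 2.75e6, "total_iops": 3.0e6}
DDN = {"score": 648.96, "md_iops": 520e3, "total_iops": 1.45e6}


def claim_ratios(ours: dict, theirs: dict) -> dict:
    """Return ours/theirs for every metric present in both dicts."""
    return {k: ours[k] / theirs[k] for k in ours if k in theirs}


if __name__ == "__main__":
    for metric, r in claim_ratios(WEKA, DDN).items():
        print(f"{metric}: {r:.2f}x")
```

With these inputs the ratios come out at about 1.27x for the overall score, 5.29x for metadata IOPS, and 2.07x for “total IOPS”, consistent with WEKA’s “5×” and “over 2×” wording.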

Our understanding of VAST’s point of view is that the IO500 is a synthetic benchmark that does not resemble real-world workloads. The only normalization is that each test uses 10 client nodes; results are not normalized to storage system size or client configuration, which skews comparisons. For example, the client cluster connected to the DDN system had 1,600 cores versus 640 cores for the VAST setup.

VAST might say that customers submit IO500 results as a form of bragging, tuning highly tunable systems such as Lustre to get the best results. VAST systems don’t offer or need that kind of tuning. Fundamentally, storage performance is a function of how many SSDs a system has. Bigger systems are faster, and if you compare system performance without normalizing to system size in PB or number of SSDs, you’re not comparing an apple to an orange; you’re comparing a bushel of apples with a truckload of oranges.

From VAST’s viewpoint, organizations such as MLCommons, with its MLPerf benchmarks, forbid non-standard comparisons like this through their Closed and Open divisions, which discourages what VAST sees as this skewed kind of benchmark warfare.
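VAST’s argument is that raw scores should be normalized by system size. The article gives no SSD counts, so this minimal sketch instead divides a score by usable capacity and by client node count, using the figures WEKA quotes for its own run and for DDN’s best-ever run (“~2,000” nodes is taken as 2,000). Dividing an IO500 score by capacity is only a crude illustration, since the score is not defined to scale linearly with capacity; the class and function names are hypothetical. Note that WEKA says DDN’s most recent configuration reported 0 PiB of usable capacity, for which a per-PiB figure is undefined, so the sketch raises an error in that case.

```python
from dataclasses import dataclass


@dataclass
class Submission:
    name: str
    score: float       # IO500 score as published
    usable_pib: float  # usable storage capacity, PiB
    client_nodes: int  # client nodes used for the run


def score_per_pib(s: Submission) -> float:
    """IO500 score divided by usable capacity (a crude size normalization)."""
    if s.usable_pib <= 0:
        raise ValueError(f"{s.name}: no usable capacity reported, cannot normalize")
    return s.score / s.usable_pib


def score_per_client_node(s: Submission) -> float:
    """IO500 score divided by the number of client nodes."""
    if s.client_nodes <= 0:
        raise ValueError(f"{s.name}: client node count must be positive")
    return s.score / s.client_nodes


if __name__ == "__main__":
    # Capacity and node figures as quoted by WEKA; "~2,000" is taken as 2,000.
    runs = [
        Submission("WEKA (Samsung)", 826.86, 1.5, 291),
        Submission("DDN", 648.96, 42.0, 2000),
    ]
    for s in runs:
        print(f"{s.name}: {score_per_pib(s):.2f} per PiB, "
              f"{score_per_client_node(s):.3f} per client node")
```

The choice of denominator changes the size of the gap considerably, which is the heart of VAST’s objection to comparing unnormalized scores.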

Hammerspace Global Marketing Head Molly Presley told us: “Hammerspace has already demonstrated linear, efficient scalability in the IO500 10-node challenge and in the MLPerf Storage benchmark. These results show that Hammerspace performs on par [with] proprietary parallel file systems like Lustre—while also being easier to deploy and better aligned with the needs of enterprise IT. We have not focused on “hero runs” optimized for a single, narrow benchmark submission, but rather on realistic, scalable configurations that matter to customers.

“Few organizations today are eager to deploy yet another proprietary storage silo just to enable AI or boost performance. What they need are data-centric solutions that provide full visibility and seamless access to their data—wherever it lives. Hammerspace delivers the ability to centrally manage a virtualized cloud of data across their entire estate. Whether on-premises, in the public cloud, or in hybrid environments, organizations gain unified control with intelligent, policy-driven automation for data placement, access, and governance. The result is unmatched agility, efficiency, and simplicity—without compromising performance. The future of AI data infrastructure is open, standards-based, and spans environments. That’s the world Hammerspace is building.”

Bootnote

In the IO500 “10-Node Research” category, not the production category, Hammerspace announced it “delivered 2X the IO500 10-node challenge score and 3X the bandwidth of VAST—using just nine nodes compared to VAST’s 128. This results in higher performance with a fraction of the hardware, power, and infrastructure complexity to deploy and manage. Hammerspace ranked in the Top 10 highest IOEasy Write and IOEasy Read score in the 10-node challenge.” It’s comparing its score in the 10-node research list to that of VAST in the 10-node production list. The IO500 benchmark suite is identical for both the 10-node Production and Research lists. Read more in a blog here.