Kioxia has tweaked its AiSAQ SSD-based vector search by enabling admins to vary vector index capacity and search performance for different workloads.

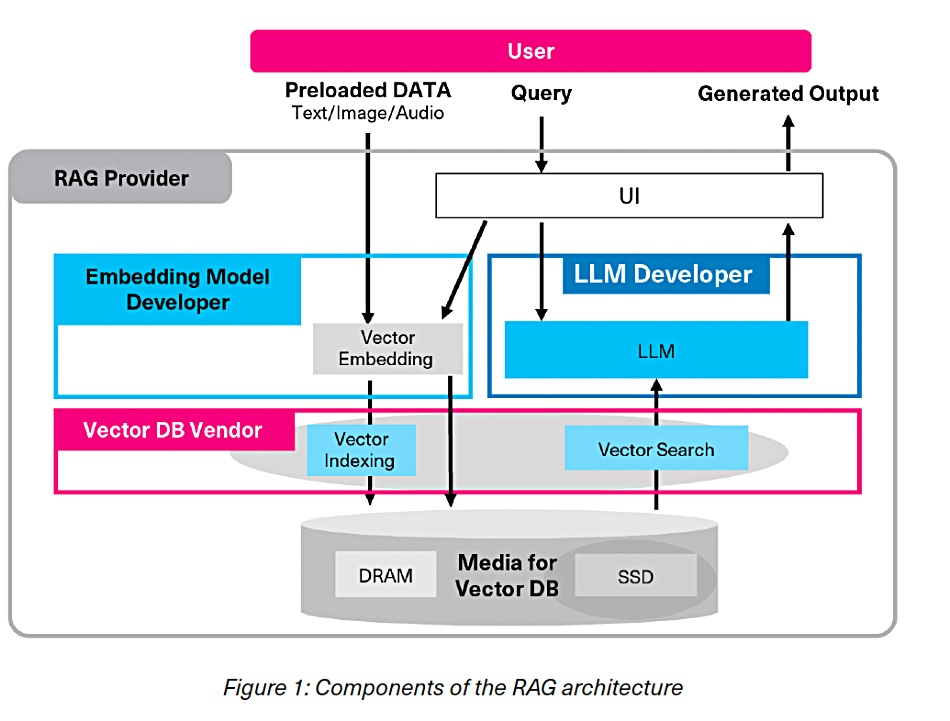

RAG (retrieval-augmented generation) is an AI large language model (LLM) technique in which an organization’s proprietary data is used to make the LLM’s responses more accurate and relevant to a user’s requests. It relies on processing that information into multi-dimensional vectors (numerical representations of its key aspects) that are mapped into a vector space, where similar vectors are positioned closer together than dissimilar ones. During response generation, the user’s request is also vectorized, and a process called semantic search looks through this space for the stored vectors most similar to it.
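As a rough illustration of semantic search, the sketch below ranks a few stored vectors by cosine similarity to a query vector. It is an exact brute-force search, not the approximate nearest neighbor search AiSAQ performs, and the document names and three-dimensional vectors are invented for the example; real embeddings typically have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between vectors a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def semantic_search(query, index, top_k=2):
    """Return the ids of the top_k stored vectors most similar to query."""
    scored = [(cosine_similarity(query, vec), doc_id) for doc_id, vec in index.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [doc_id for _, doc_id in scored[:top_k]]


# Hypothetical vector index: document id -> embedding (values are made up).
index = {
    "ssd_datasheet": [0.9, 0.1, 0.0],
    "dram_pricing": [0.1, 0.9, 0.0],
    "holiday_policy": [0.0, 0.1, 0.9],
}

# Hypothetical vectorized user request.
query = [0.8, 0.2, 0.0]

print(semantic_search(query, index))
```

Scanning every vector like this becomes impractical as the index grows, which is why production systems rely on approximate methods instead.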

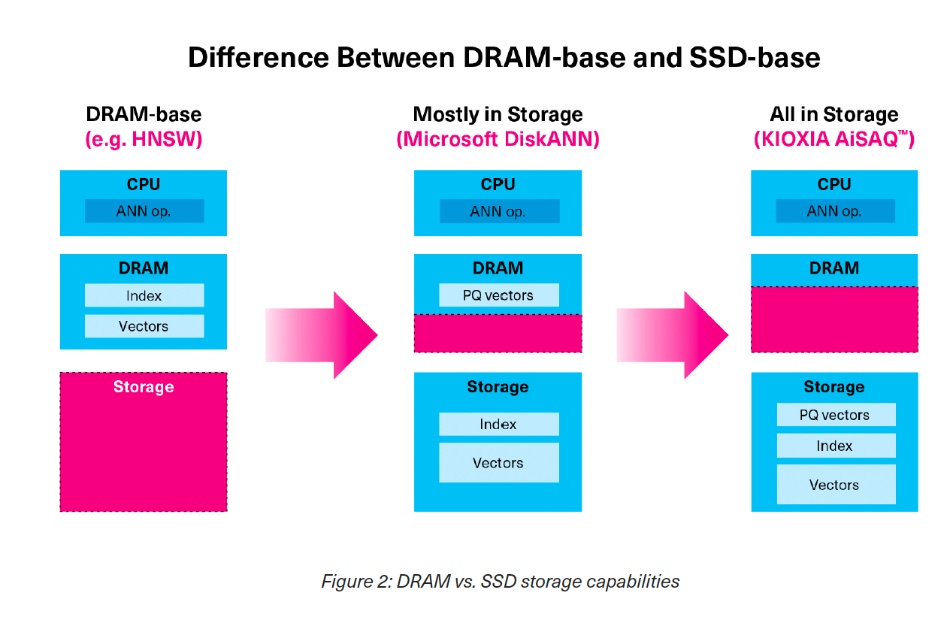

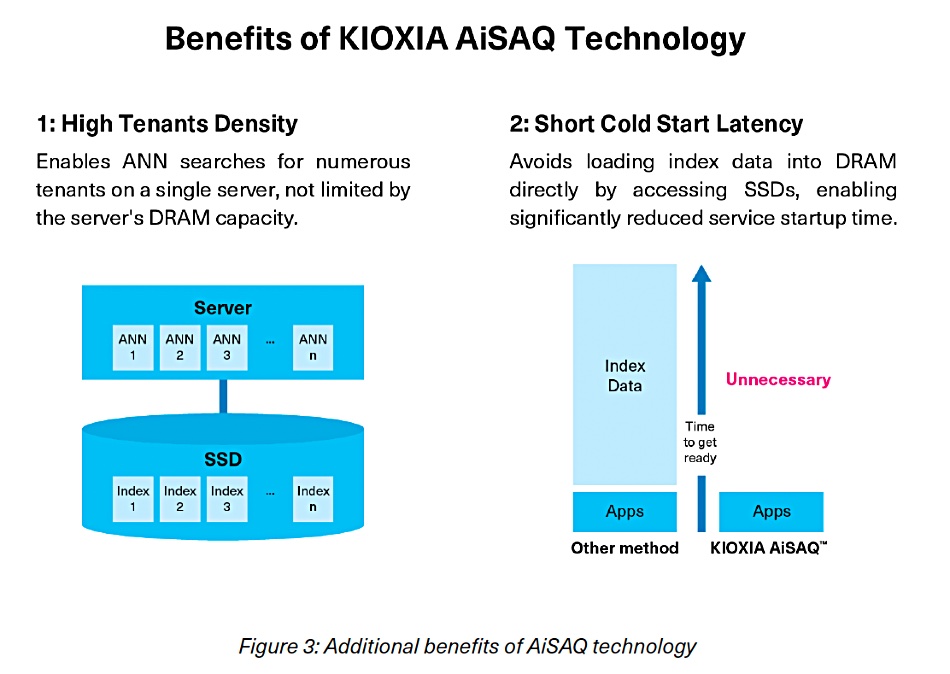

AiSAQ (All-in-Storage ANNS with Product Quantization) software carries out the approximate nearest neighbor search (ANNS) used for semantic searches across vector indices on SSDs instead of in DRAM. This reduces DRAM occupancy and lets the searchable vector index grow beyond DRAM capacity limits, up to the capacity of a set of SSDs. Kioxia has updated the open source AiSAQ software so that search performance can be tuned in relation to the size of the vector index.

Neville Ichaporia, Kioxia America SVP and GM of its SSD business unit, stated: “With the latest version of Kioxia AiSAQ software, we’re giving developers and system architects the tools to fine-tune both performance and capacity.”

On a system with a fixed amount of installed SSD capacity, increasing search performance (queries per second) requires more SSD capacity per vector, which reduces the number of vectors that can be stored. Conversely, maximizing the number of vectors means reducing SSD capacity consumption per vector, which lowers performance. The optimal balance between these two opposing conditions depends on the specific workload.
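The capacity side of this trade-off is simple arithmetic, sketched below. The 122 TB figure is the SSD capacity mentioned in this article; the two per-vector footprints and the profile names are hypothetical values chosen only to show the effect, not AiSAQ settings or measurements.

```python
TB = 10**12  # decimal terabyte, as used for SSD capacities


def max_vectors(ssd_capacity_bytes, bytes_per_vector):
    """Return how many vectors fit in the given SSD capacity."""
    return ssd_capacity_bytes // bytes_per_vector


ssd_capacity = 122 * TB

# Hypothetical per-vector SSD footprints in bytes (assumptions for illustration).
profiles = {
    "performance-oriented": 4096,  # more bytes per vector, fewer vectors
    "capacity-oriented": 512,      # fewer bytes per vector, more vectors
}

for name, footprint in profiles.items():
    print(f"{name}: {max_vectors(ssd_capacity, footprint):,} vectors")
```

With these assumed footprints, cutting the per-vector footprint by a factor of eight lets the same SSD hold eight times as many vectors, at the cost of the search performance that the larger footprint would have bought.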

The new release enables admins to select the balance for a variety of contrasting workloads within a RAG system, without altering the hardware. Kioxia says its update makes AiSAQ technology a suitable SSD-based ANNS for RAG and for other vector-hungry applications such as offline semantic searches. SSDs with capacities of 122 TB or more may be particularly well-suited for large-scale RAG operations.

You can read the scientific paper explaining AiSAQ here, and download the AiSAQ open source software here.