Meta is eyeing a massive AI datacenter expansion program, and its chosen storage suppliers look set for a bonanza.

CEO Mark Zuckerberg announced on Facebook: “We’re going to invest hundreds of billions of dollars into compute to build superintelligence. We have the capital from our business to do this,” referring to Meta Superintelligence Labs.

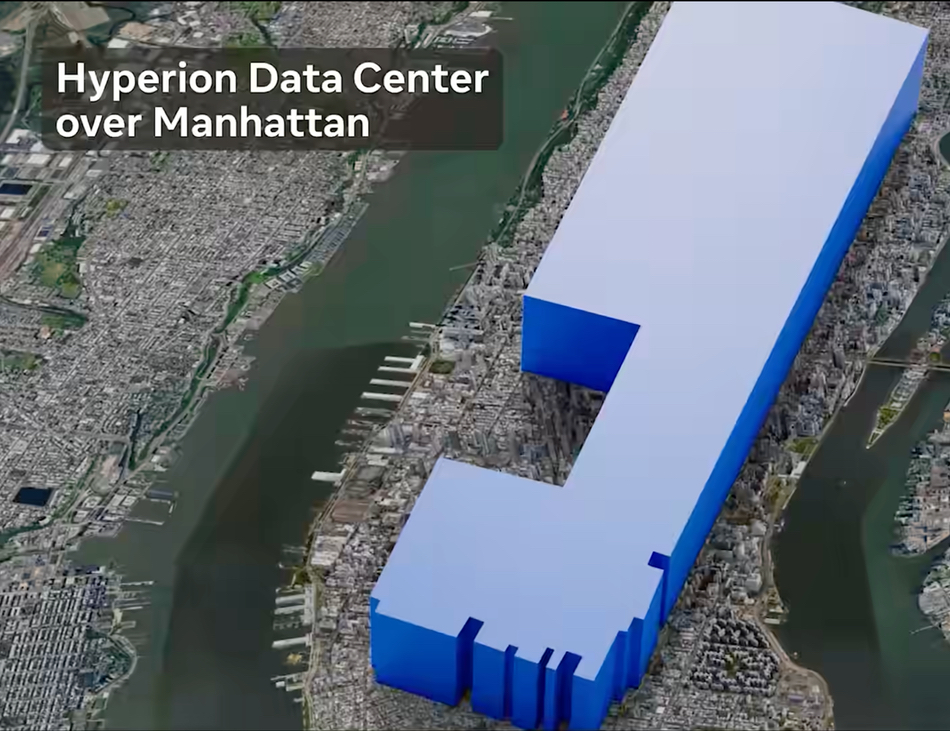

“SemiAnalysis just reported that Meta is on track to be the first lab to bring a 1 GW-plus supercluster online. We’re actually building several multi-GW clusters. We’re calling the first one Prometheus and it’s coming online in ’26. We’re also building Hyperion, which will be able to scale up to 5 GW over several years. We’re building multiple more titan clusters as well. Just one of these covers a significant part of the footprint of Manhattan.”

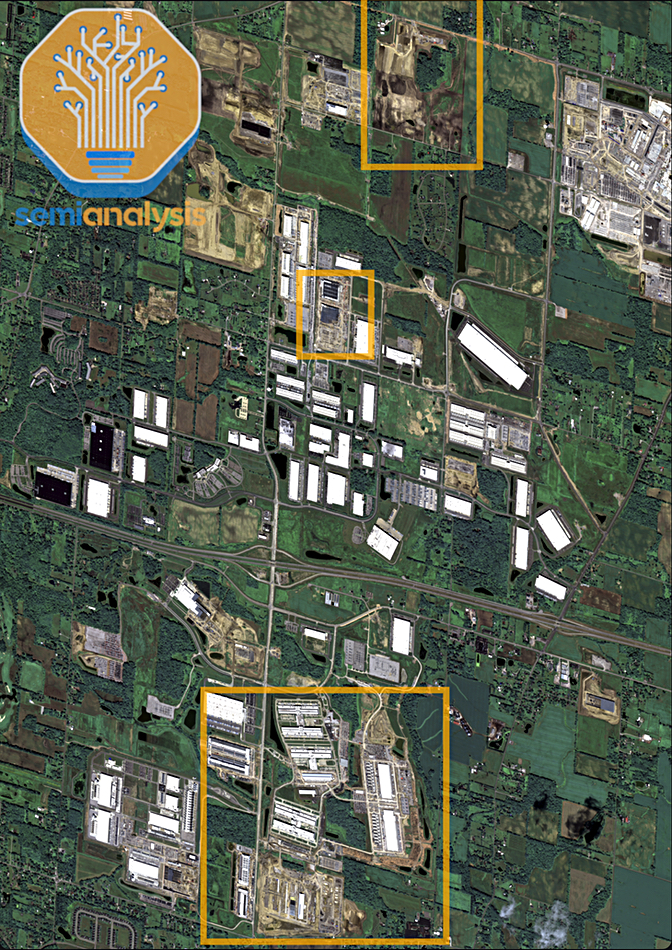

The SemiAnalysis post says Meta is involved in “the pursuit of Superintelligence” and its Prometheus AI training cluster will have 500,000 GPUs, draw 1,020 MW in electricity, and have 3,171,044,226 TFLOPS of performance. The Prometheus cluster is in Ohio and spans three sites linked by “ultra-high-bandwidth networks all on one back-end network powered by Arista 7808 Switches with Broadcom Jericho and Ramon ASICs.” Two 200 MW on-site natural gas plants will supply part of the electrical power needed.

SemiAnalysis talks about a second Meta AI frontier cluster in Louisiana, “which is set to be the world’s largest individual campus by the end of 2027, with over 1.5 GW of IT power in phase 1. Sources tell us this is internally named Hyperion.”

Datacenter Dynamics similarly reports that “Meta is also developing a $10 billion datacenter in Richland Parish, northeast Louisiana, known as Hyperion. First announced last year as a four million-square-foot campus, it is expected to take until 2030 to be fully built out. By the end of 2027, it could have as much as 1.5 GW of IT power.”

Zuckerberg says: “Meta Superintelligence Labs will have industry-leading levels of compute and by far the greatest compute per researcher. I’m looking forward to working with the top researchers to advance the frontier!” The labs will then draw on the 1 GW Prometheus cluster in Ohio with its 500,000 GPUs; the Hyperion cluster in Louisiana, with 1.5 GW of power and, by extrapolation, around 750,000 GPUs in its Phase 1; and “multiple more titan clusters as well.”
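The 750,000 figure is a straight-line extrapolation from Prometheus. A minimal Python sketch of that arithmetic, assuming Hyperion's Phase 1 uses the same power per GPU as Prometheus (1,020 MW for 500,000 GPUs), which neither Meta nor SemiAnalysis has stated; the constant and function names are our own:

```python
# Prometheus figures as reported by SemiAnalysis
PROMETHEUS_GPUS = 500_000
PROMETHEUS_MW = 1_020

# Assumption: Hyperion Phase 1 draws the same power per GPU as Prometheus
HYPERION_PHASE1_MW = 1_500


def gpus_for_power(megawatts: float) -> int:
    """Scale the Prometheus GPU count linearly with power draw."""
    kw_per_gpu = PROMETHEUS_MW * 1_000 / PROMETHEUS_GPUS  # about 2.04 kW per GPU
    return round(megawatts * 1_000 / kw_per_gpu)


hyperion_gpus = gpus_for_power(HYPERION_PHASE1_MW)
print(f"Hyperion Phase 1: ~{hyperion_gpus:,} GPUs")
```

The result, about 735,000 GPUs, rounds up to the 750,000 used here.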

Assuming three additional clusters, each with a Prometheus-Hyperion level of power, we arrive at a total of between 2.75 and 3 million GPUs. How much storage will they need?
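One way to reproduce this range, assuming the three unnamed clusters are each sized somewhere from Prometheus (500,000 GPUs) to the midpoint between Prometheus and Hyperion Phase 1 (the extrapolated 750,000 GPUs). The cluster count and sizes are our assumptions, not Meta figures:

```python
PROMETHEUS_GPUS = 500_000
HYPERION_GPUS = 750_000  # extrapolated Phase 1 figure, not a Meta number
EXTRA_CLUSTERS = 3       # assumption: three more "titan" clusters


def total_gpus(gpus_per_extra_cluster: int) -> int:
    """Prometheus + Hyperion + the assumed extra clusters."""
    return PROMETHEUS_GPUS + HYPERION_GPUS + EXTRA_CLUSTERS * gpus_per_extra_cluster


low = total_gpus(PROMETHEUS_GPUS)                          # extra clusters sized like Prometheus
mid = total_gpus((PROMETHEUS_GPUS + HYPERION_GPUS) // 2)   # halfway between the two
print(f"{low:,} to {mid:,} GPUs")
```

Three Prometheus-sized extra clusters give 2.75 million GPUs; sizing them halfway between Prometheus and Hyperion gives about 3.1 million, in line with the roughly 3 million upper figure.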

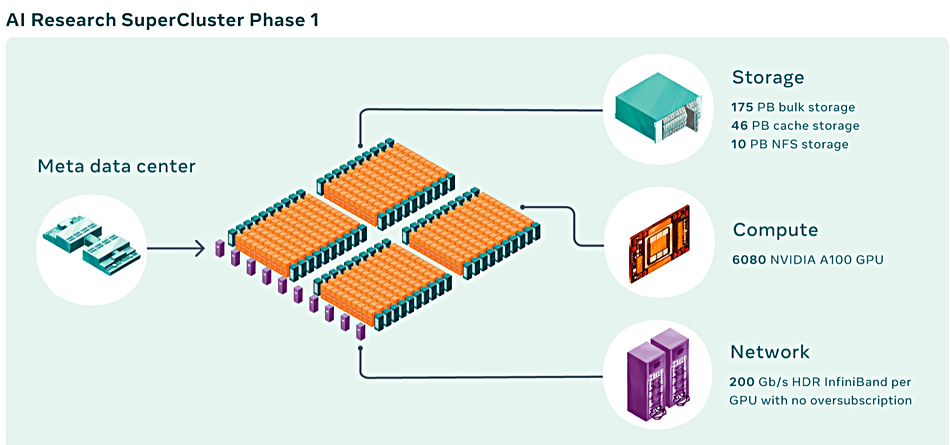

In January 2022, Meta’s AI Research SuperCluster (RSC) had 760 Nvidia DGX A100 systems as its compute nodes, for a total of 6,080 GPUs. These needed 10 PB of Pure FlashBlade storage, 46 PB of Penguin Computing Altus cache storage, and 175 PB of Pure FlashArray storage.

That’s 185 PB of Pure storage in total for 6,080 GPUs, or 30.4 TB per GPU. On completion, the RSC’s “storage system will have a target delivery bandwidth of 16 TBps and exabyte-scale capacity to meet increased demand.”
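The per-GPU figure follows directly from the RSC numbers. A quick check in Python, assuming decimal units (1 PB = 1,000 TB) and counting only the two Pure systems, as the text does:

```python
DGX_SYSTEMS = 760
GPUS_PER_DGX_A100 = 8  # each DGX A100 holds eight A100 GPUs

# Pure capacity in PB; the 46 PB Penguin Altus cache is excluded
pure_pb = {"FlashBlade": 10, "FlashArray": 175}

gpus = DGX_SYSTEMS * GPUS_PER_DGX_A100
total_pb = sum(pure_pb.values())
tb_per_gpu = total_pb * 1_000 / gpus

print(f"{gpus:,} GPUs, {total_pb} PB of Pure storage, {tb_per_gpu:.1f} TB/GPU")
```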

Subsequently, Pure announced in April that Meta was a customer for its proprietary DirectFlash Module (DFM) drive technology, licensing hardware and software IP. Meta would buy its own NAND chips and have the DFMs manufactured by an integrator. Pure CEO Charlie Giancarlo said at the end of 2024: “We expect early field trial buildouts next year, with large full production deployments, on the order of double-digit exabytes, expected in calendar 2026.”

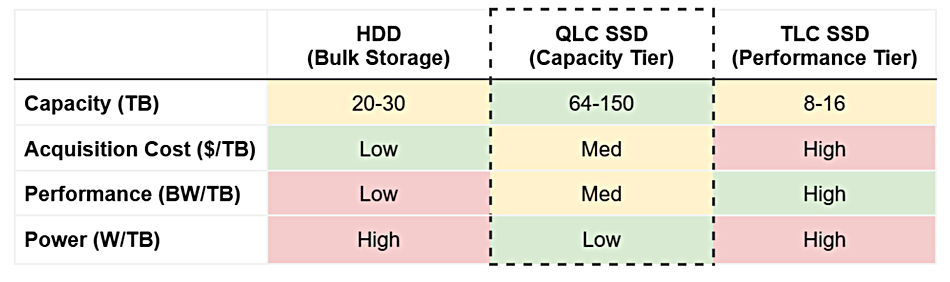

A March 2024 Meta blog discussed three tiers of Meta datacenter storage:

- HDD – the lowest-performance, bulk data tier, with 20-30 TB/drive

- QLC SSD – the capacity tier, with medium performance and 64-150 TB/drive

- TLC SSD – the performance tier, with 8-16 TB/drive

Meta will, we can assume, be buying both HDD and flash storage for its AI superclusters, with Pure Storage IP being licensed for the flash part and NAND chips bought from suppliers such as Kioxia and Solidigm. How much flash capacity? Training needs could be 1 PB per 2,000 GPUs, or 0.5 TB per GPU, while inference could need 0.1 to 1 TB per GPU, so split the difference at around 0.5 TB per GPU, which again works out at roughly 1 PB per 2,000 GPUs.

We don’t know the mix of training and inference workloads on Meta’s AI superclusters and can’t make any realistic assumptions about it. However, because both estimates come to about 0.5 TB per GPU, the mix matters little: across roughly 3 million GPUs, the total flash capacity could approach 1.5 EB. We have asked Pure Storage about its role in these AI superclusters, but it had no comment to make.
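A minimal Python sketch of the flash estimate, assuming decimal units and about 3 million GPUs. The per-GPU rates are the rules of thumb above, and the training share is a free parameter we have added for illustration:

```python
TB_PER_GPU_TRAINING = 1_000 / 2_000   # 1 PB per 2,000 GPUs = 0.5 TB/GPU
TB_PER_GPU_INFERENCE = 1_000 / 2_000  # split-the-difference inference figure, also 0.5 TB/GPU


def flash_eb(total_gpus: int, training_share: float) -> float:
    """Estimated flash capacity in exabytes for a given training/inference split."""
    tb_per_gpu = (training_share * TB_PER_GPU_TRAINING
                  + (1 - training_share) * TB_PER_GPU_INFERENCE)
    return total_gpus * tb_per_gpu / 1_000_000  # 1 EB = 1,000,000 TB


for share in (0.0, 0.5, 1.0):
    print(f"training share {share:.0%}: {flash_eb(3_000_000, share):.2f} EB")
```

Every split prints 1.50 EB, since the two rates are equal; if future figures put inference well above or below 0.5 TB per GPU, the training share would start to matter.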

Meta is also a Hammerspace Global Data Environment software customer for its AI training systems. We have asked Hammerspace if it is involved with this latest supercluster initiative and Molly Presley, Head of Global Marketing, told us: “Hammerspace is very involved in many architectural discussions and production environments at Meta. Some in data center and some in cloud / hybrid cloud. I am not able to comment on if Meta has finalized their data platform or storage software plans for these superclusters though.”

The disk drives will almost certainly come from Seagate and Western Digital. Seagate has said that two large cloud computing businesses have each bought 1 EB of HAMR disk drive storage, but it has not said whether Meta is one of them.

Two more considerations. First, the 3 million or so GPUs Meta will be buying will each need HBM (high-bandwidth memory) chips, meaning massive orders for one or more HBM suppliers such as SK hynix, Samsung, and Micron.

Second, Meta will have exabytes of cold data after its training runs. This could be thrown away or kept for the long term, and keeping it points to tape storage, which is cheaper than disk or SSDs for archives.

CIO Insight reported in August 2022 that Meta and other hyperscalers were LTO tape users. It said: “Qingzhi Peng, Meta’s Technical Sourcing Manager, noted that his company operates a large tape archive storage team. ‘Hard disk drive (HDD) technology is like a sprinter, and tape is more like a marathon runner,’ said Peng. ‘We use tape in the cold data tier, flash in the hot tier, and HDD and flash in the warm tier.'”

There is no indication of which supplier, such as Quantum or Spectra Logic, provides Meta’s tape storage, but whichever it is could be in line for tape library orders as well.