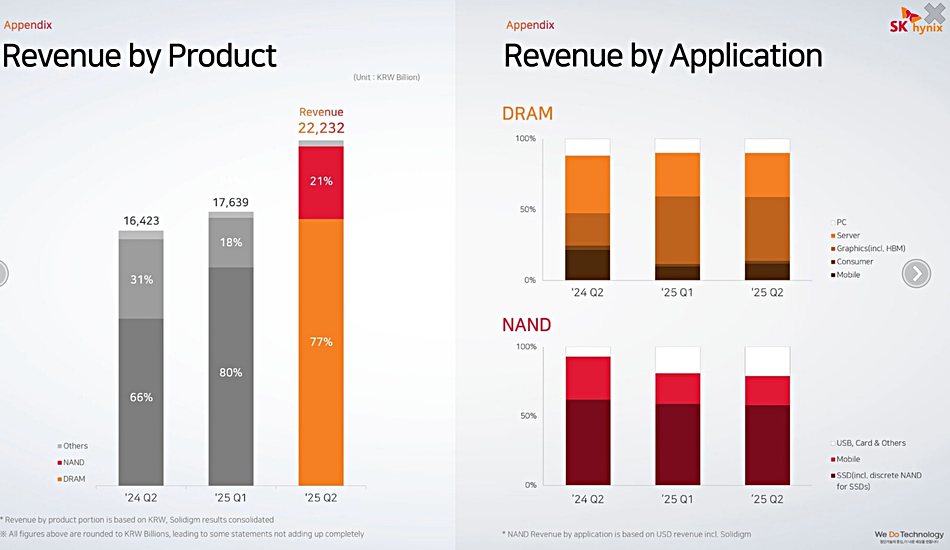

High Bandwidth Memory (HBM) has become a high revenue earner for Korea’s SK hynix, with second quarter 2025 revenue jumping 35.4 percent year-on-year to ₩22.23 trillion ($16.23 billion).

Profit was ₩7 trillion ($5.1 billion), up 69.8 percent annually but down 14 percent sequentially. The company is also amassing cash: cash and cash equivalents rose by ₩2.7 trillion ($1.97 billion) from the prior quarter to ₩17 trillion ($12.4 billion) at the end of June. Its debt ratio and net debt ratio stood at 25 percent and 6 percent respectively, as net debt fell by ₩4.1 trillion ($3 billion) compared with the previous quarter. HBM chips provided 77 percent of its second quarter revenue.

Song Hyun Jong, SK hynix President and Head of Corporate Center, said in the earnings call: “Demand for AI memory continued to grow, driven by aggressive AI investments from big tech companies.”

SK hynix said its customers plan to launch new products in the second half, and it expects its 2025 HBM revenue to double compared with 2024. It believes increasing competition among tech companies to enhance AI model inferencing will lead to higher demand for high-performance, high-capacity memory products. Ongoing investments by nations to build sovereign AI will also help, with Song Hyun Jong saying: “Additionally, ongoing investments by governments and corporations for sovereign AI are likely to become a new long term driver of AI memory demand.”

AI looks to be the gift that just keeps on giving: AI training needs GPUs with HBM and DRAM, AI inferencing needs servers with GPUs plus HBM and DRAM, and AI PCs, notebooks, tablets and smartphones will need DRAM and NAND.

The company will start supplying an LPDDR-based DRAM module for servers this year, and is preparing GDDR7 products for AI GPUs with capacity expanded from 16Gb to 24Gb. HBM4 will come later this year. Song Hyun Jong said: “We are on track to meet our goal as a Full Stack AI Memory Provider satisfying customers and leading market expansion through timely launch of products with best-in-class quality and performance required by the AI ecosystem.”

NAND looks a tad less exciting, as SK hynix will “maintain a prudent stance for investments considering demand conditions and profitability-first discipline, while continuing with product developments in preparation for improvements in market conditions.” It will expand sales of QLC-based high-capacity eSSDs and build a 321-layer NAND product portfolio.

Kioxia/Sandisk’s BiCS10 NAND has 332 layers, while Samsung’s V10 NAND promises 400+, up from 286 layers in its current V9 generation. Micron is at 276 layers with its G9 NAND and YMTC at 232 with its Gen5 technology. SK hynix’s Solidigm subsidiary has a 192-layer product and looks in need of a substantial layer count jump if it is to stay relevant. It builds its NAND in a different fab, and with a different design, from SK hynix and, unless it adopts SK hynix’s 321-layer technology, will have to devise its own at considerable expense. Whichever route it takes, adopting SK hynix tech or going it alone, it will have to equip its fabs with new tools. Using SK hynix tech would potentially save a lot of development expense, and a 321-layer product would give it an up-front 67 percent increase in die capacity over its 192-layer product.
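The 67 percent figure is simply the ratio of layer counts (321/192 ≈ 1.67). The short Python sketch below reproduces that arithmetic and compares each vendor's quoted layer count with Solidigm's 192 layers. It assumes, as the 67 percent claim implicitly does, that die capacity scales in proportion to layer count with die area, bits per cell and other design factors held constant, which is a simplification. The helper names (`LAYER_COUNTS`, `pct_increase`, `layer_gain_vs`) are hypothetical and exist only for illustration.

```python
# Layer counts as quoted in this article.
LAYER_COUNTS = {
    "Kioxia/Sandisk BiCS10": 332,
    "SK hynix 321-layer": 321,
    "Samsung V9": 286,
    "Micron G9": 276,
    "YMTC Gen5": 232,
    "Solidigm (current)": 192,
}


def pct_increase(new: int, old: int) -> float:
    """Percentage increase going from `old` to `new`."""
    return (new / old - 1) * 100


def layer_gain_vs(baseline: str, counts: dict) -> dict:
    """Layer-count gain (percent, 1 decimal place) of every entry over `baseline`.

    Assumption: die capacity tracks layer count one-for-one, so this gain
    is also read as the die-capacity gain.
    """
    base = counts[baseline]
    return {
        name: round(pct_increase(layers, base), 1)
        for name, layers in counts.items()
        if name != baseline
    }


if __name__ == "__main__":
    gains = layer_gain_vs("Solidigm (current)", LAYER_COUNTS)
    # Print from largest to smallest gain over Solidigm's 192 layers.
    for name, gain in sorted(gains.items(), key=lambda kv: -kv[1]):
        print(f"{name}: +{gain}% layers vs Solidigm's 192")
```

Running it shows the SK hynix 321-layer entry at about +67.2 percent, which rounds to the 67 percent quoted above.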

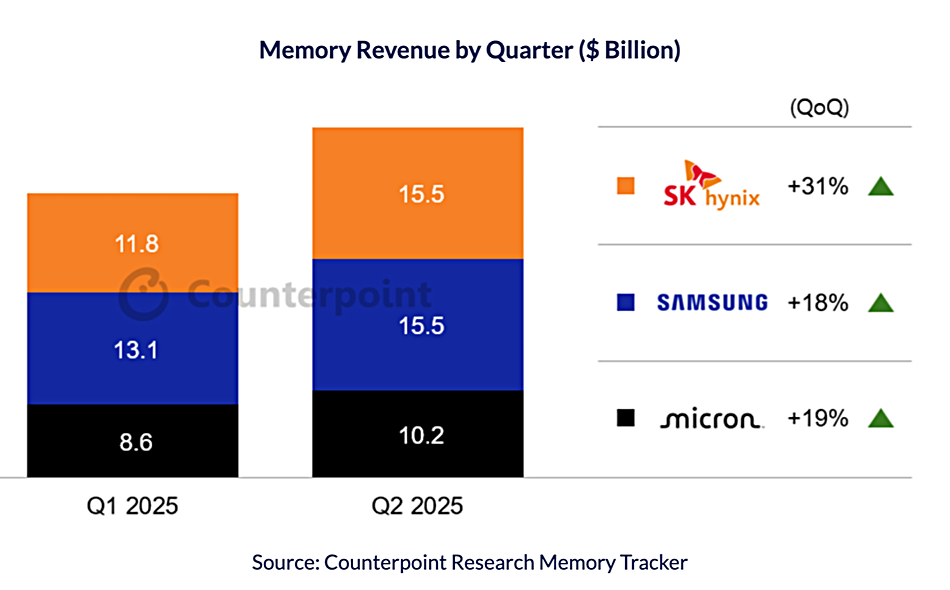

Overall, SK hynix has grown its share of the DRAM and NAND market, with Counterpoint Research estimating it is now neck and neck with Samsung for combined DRAM and NAND revenues in the second quarter at the $15.5 billion mark.

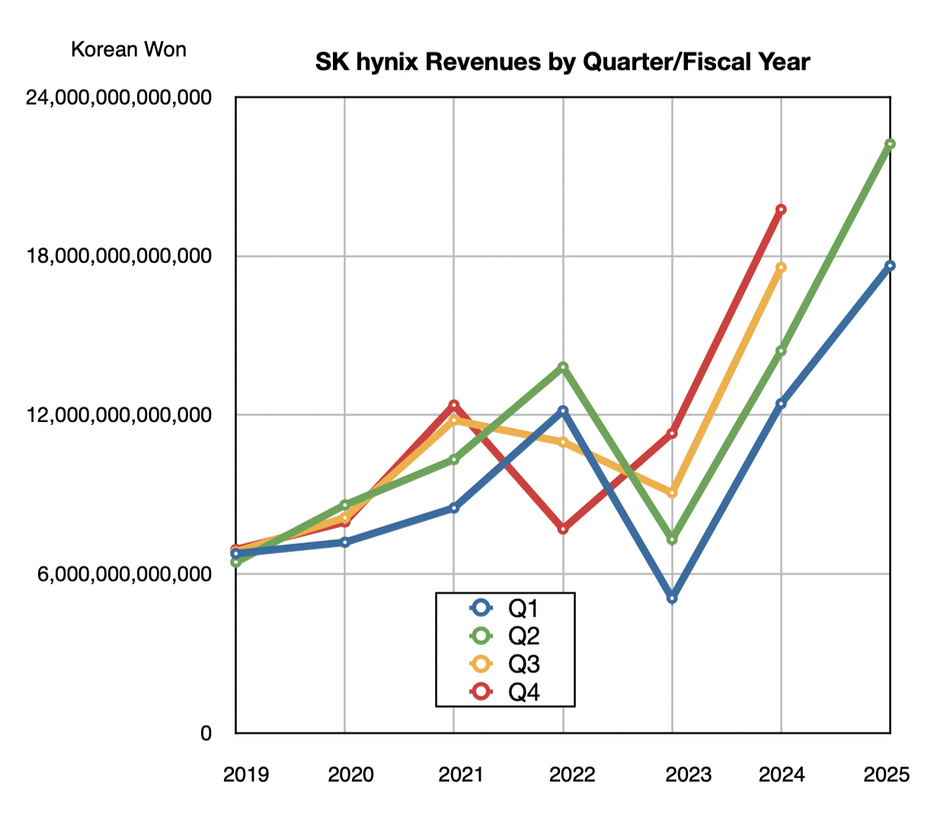

Counterpoint Senior Analyst Jeongku Choi said, “SK hynix saw its largest-ever quarterly loss (₩3.4 trillion or $2.7 billion) in Q1 2023, prompting painful decisions such as production cuts. However, backed by world-class technology, the company began a remarkable turnaround, kick-started by the world’s first mass production of HBM3E in Q1 2024. By Q1 2025, SK hynix had claimed the top spot in global DRAM revenue, and just one quarter later, it is now competing head-to-head with Samsung for leadership in the overall memory market.”